Why seeing must be believing – AI, computational imaging and the battle against deepfakes

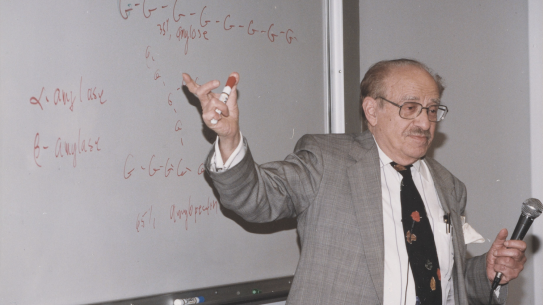

Nasir Memon, Vice Dean for Academics and Student Affairs, professor of computer science and engineering, and co-founder of the NYU Center for Cybersecurity, writes that AI-powered deepfake technologies can enable cybercriminals to bypass biometric security protections, commit synthetic identity fraud, engage in cyber-bullying and blackmail, and exaggerate or falsify personal achievements. Their emergence, he writes, "represents a serious cybersecurity threat at a time when people are more likely to be influenced by images and less likely to critically assess them."

Memon explains that the rise of sophisticated digital technologies that can alter visual and aural inputs, seamlessly changing their meaning, has made tasks as simple as declaring an image ‘real’ or a video ‘accurate’ surprisingly difficult.

To help solve this challenge, he began a project two years ago with Pawel Korus, research assistant professor and member of NYU’s Center for Cybersecurity, to devise a camera system that essentially embeds a digital "watermark" (a string of code in a piece of digital media) to verify an image's authenticity. "If a party attempts to alter the image, the watermark will break," he writes.

Memon said his group is working on “inserting digital fingerprints right at the point the image is first created, providing for an additional means of testing media authenticity. These fingerprints are dynamic and can be generated on the fly based on a secret key and the photo’s authentication context, such as location or timestamp information, which is typically housed in a database.”
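
The idea of a fingerprint derived from a secret key and the photo's context can be illustrated with a minimal Python sketch. This is not the group's method, which the article does not describe in detail: it swaps in a standard keyed hash (HMAC-SHA256) and keeps the fingerprint separate from the image rather than embedding it in the pixels. The function names `make_fingerprint` and `verify_fingerprint`, and the `location` and `timestamp` fields, are assumptions made for illustration.

```python
import hashlib
import hmac
import json


def make_fingerprint(secret_key: bytes, image_bytes: bytes, context: dict) -> str:
    """Derive a keyed fingerprint from the image bytes and its capture context.

    Hypothetical helper: a stand-in for the dynamic fingerprints described
    in the article, not the actual NYU scheme.
    """
    # Serialize the context deterministically so identical fields give identical bytes.
    context_bytes = json.dumps(context, sort_keys=True, separators=(",", ":")).encode("utf-8")
    # Hash the image first so the authenticated message has a fixed-length prefix.
    image_digest = hashlib.sha256(image_bytes).digest()
    return hmac.new(secret_key, image_digest + context_bytes, hashlib.sha256).hexdigest()


def verify_fingerprint(secret_key: bytes, image_bytes: bytes, context: dict, fingerprint: str) -> bool:
    """Recompute the fingerprint and compare it in constant time."""
    expected = make_fingerprint(secret_key, image_bytes, context)
    return hmac.compare_digest(expected, fingerprint)


if __name__ == "__main__":
    key = b"example-secret-key"  # assumed to be known only to the camera and the verifier
    image = b"raw image bytes"   # placeholder for real image data
    ctx = {"location": "40.69,-73.99", "timestamp": "2020-01-01T12:00:00Z"}

    fp = make_fingerprint(key, image, ctx)
    print(verify_fingerprint(key, image, ctx, fp))            # unaltered image
    print(verify_fingerprint(key, image + b"edit", ctx, fp))  # altered image
```

In this sketch, any change to the image bytes or to the recorded context yields a different fingerprint, which mirrors the "watermark will break" behavior in spirit. A watermark embedded in the image itself, as the article describes, would also need to survive routine processing such as compression, which a plain hash does not.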